Neuromorphic and Deep Neural Networks

Disclaimer: I worked on analog and mixed-signal neuromorphic micro-chips during my PhD, and in the last 10 years have switched to Deep Learning and fully digital neural networks. For a complete list of our works, see here. An older publication on this topic, from our group, is here.

Neuromorphic neural networks

Neuromorphic or standard digital for computing neural networks: which one is better? This is a long question to answer. Standard digital neural networks are the kind we see in Deep Learning, with all their success. They compute using digital values of 64 bits or fewer, all in standard digital hardware.

There are many flavors of neuromorphic systems and hardware, which originated largely from the pioneering work of Carver Mead (his book cover on right). They most typically use 1 bit per value, as in spiking neural networks. They can compute in analog or in digital compute units. The main idea is that neurons are free-running, independent units that are stimulated by spikes communicated as 1-bit pulses. Neuromorphic neurons can be either complex digital counters or simpler analog integrators.

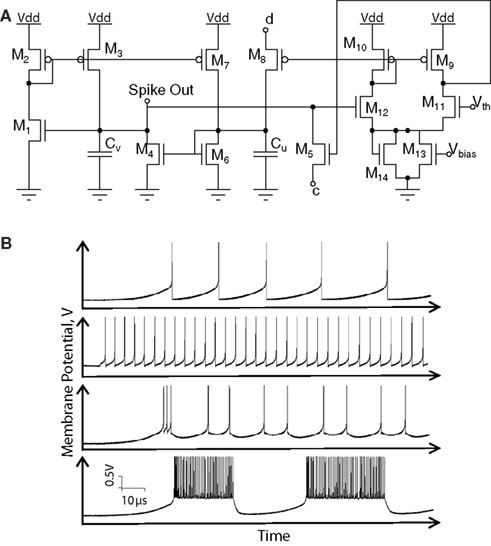

The Izhikevich neuron circuit, from here

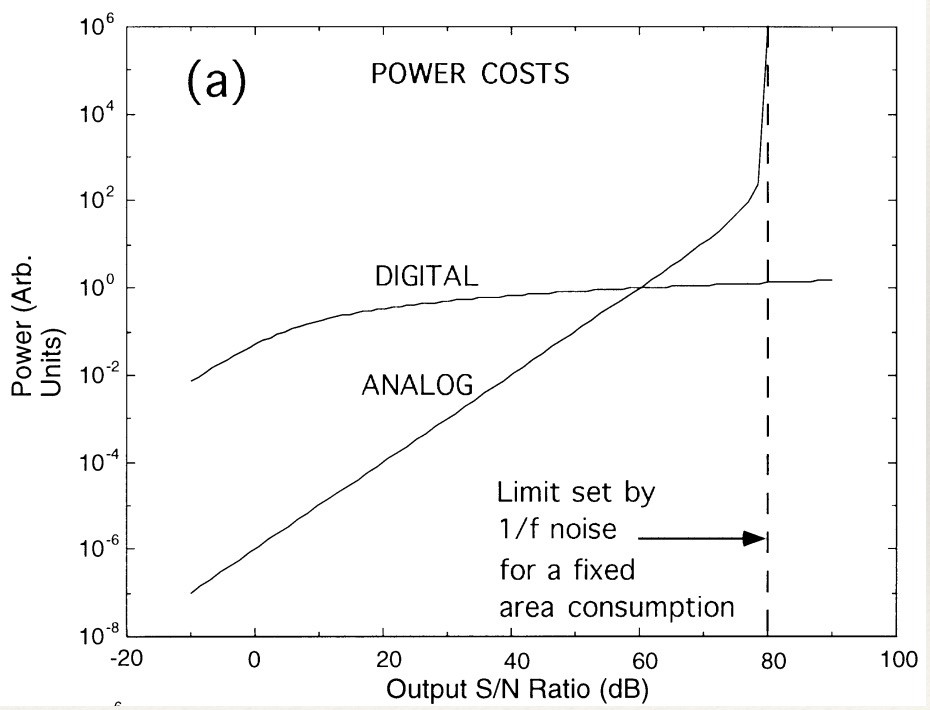

Communication is asynchronous and complex. Using digital communication is also a lot more energy efficient and noise-resistant, as you can see below.

Neuromorphic hardware in digital was designed by IBM (TrueNorth), and in analog by many research groups (Boahen, Hasler, our lab, etc.). The best system uses analog computation and digital communication:

Using conventional digital hardware to simulate neuromorphic hardware (SpiNNaker) is clearly inefficient, because we are using 32-bit hardware to simulate 1-bit hardware.

Neural network computation

Neural networks require multiply and add operations. The product of an M*M matrix by an M vector requires M² multiplications and M² additions, or 2M² operations. They also compute by convolutions, or artificial receptive fields, which are essentially matrix-vector multiplications, except that you multiply by multiple values (filters) for each output data-point.
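As a minimal sketch of the operation count above, the Python below multiplies an M*M matrix by an M vector with explicit loops and counts the scalar operations. It also writes a small 1-D filter as a matrix-vector product. The function names, the sizes, and the random data are illustrative assumptions, not part of any particular hardware or framework.

```python
import numpy as np

def matvec_with_count(W, x):
    """Multiply an M*M matrix by an M vector, counting scalar operations."""
    M = W.shape[0]
    y = np.zeros(M)
    mults = adds = 0
    for i in range(M):
        acc = 0.0
        for j in range(M):
            acc += W[i, j] * x[j]  # one multiplication and one accumulate-add
            mults += 1
            adds += 1
        y[i] = acc
    return y, mults, adds

def correlate_as_matvec(x, k):
    """Apply a 1-D filter k to x (deep-learning style 'convolution',
    i.e. cross-correlation) by building a banded matrix and multiplying."""
    n, m = len(x), len(k)
    T = np.zeros((n - m + 1, n))
    for i in range(n - m + 1):
        T[i, i:i + m] = k  # each output row holds a shifted copy of the filter
    return T @ x

M = 8  # illustrative size; real layers are far larger
rng = np.random.default_rng(0)
W = rng.standard_normal((M, M))
x = rng.standard_normal(M)
y, mults, adds = matvec_with_count(W, x)
print(mults, adds, mults + adds)

k = np.array([1.0, -2.0, 1.0])  # hypothetical 3-tap filter
filtered = correlate_as_matvec(x, k)
```

The counters come out to M² each, which is where the 2M² figure comes from (counting the first accumulate into zero as an addition).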

Neuromorphic systems compute the same operations in a different way: rather than using B-bit words for activations and weights, they use 1-bit spike communication from neuron to neuron. Neurons can send spikes on positive and negative lines to activate more or fewer of the neurons connected to them. Neuromorphic neurons integrate inputs in a digital counter or an analog integrator, and when a certain threshold is reached, they themselves fire another spike to communicate to other neurons.
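The integrate-and-fire behavior described here can be sketched in a few lines of Python. This is a simplified digital-counter neuron under stated assumptions: discrete time steps, inputs encoded as +1 (positive line), -1 (negative line) or 0 (no spike), and a reset that subtracts the threshold after firing. Real neuromorphic neurons are free-running and event-driven, so treat this only as an illustration of the principle.

```python
def integrate_and_fire(spikes_in, weight=1, threshold=4):
    """Digital-counter neuron: accumulate input spikes, fire at threshold.

    spikes_in: sequence of +1, -1 or 0 per time step (assumed encoding).
    Returns a list with 1 where the neuron fires and 0 elsewhere.
    """
    counter = 0
    spikes_out = []
    for s in spikes_in:
        if s > 0:
            counter += weight   # spike on the positive line
        elif s < 0:
            counter -= weight   # spike on the negative line
        if counter >= threshold:
            spikes_out.append(1)
            counter -= threshold  # reset by subtraction (an assumption)
        else:
            spikes_out.append(0)
    return spikes_out

out = integrate_and_fire([1, 1, 1, 1, 0, -1, 1, 1, 1, 1, 1])
print(out)
```

Note that the neuron only adds or subtracts; no multiplier is involved.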

Neuron arrays and neural activations: Neurons are organized in an array, and store activations in a memory inside each neuron. This is a problem, because neural networks can be large and require many neurons. This makes neuromorphic micro-chips large, because neurons cannot be re-used as they are in conventional digital systems. If one wants to re-use neuron arrays for multiple computations in the same sequence, then one has to use analog memories to store neural activations, or convert them to digital and store them in conventional digital memories. Neuromorphic problem: both these options are currently prohibitive. More below in: “Analog versus digital memories”.

Computing a neural network layer in neuromorphic arrays requires adding the weight value W to the neuron activations. This is simple: no multiplications. A digital multiplier uses L (~30–300) transistors per bit (lower bound: 12 transistors per flip-flop, 2 ff, one xor gate to multiply), while an analog multiplier may require just a handful of transistors (1 to multiply, ~5–10 for the bias circuit) to multiply ~5 bits (limited by noise). This all depends on how weights are implemented. Neuromorphic computation wins big time by using much less power.
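To illustrate why adding weights on each spike is enough, here is a small Python sketch under simplifying assumptions (discrete time steps, integer weights, no thresholding or leakage). Each time input neuron j spikes, column j of W is added to the activations. After T steps the result equals the matrix-vector product of W with the spike counts, yet only additions were performed. The names `accumulate_spikes` and `spike_trains` are hypothetical.

```python
import numpy as np

def accumulate_spikes(W, spike_trains):
    """Add column j of W to the activations whenever input j spikes.

    W: (n_out, n_in) integer weights.
    spike_trains: (T, n_in) array of 0/1 spikes, one row per time step.
    """
    act = np.zeros(W.shape[0], dtype=np.int64)
    for t in range(spike_trains.shape[0]):
        for j in np.flatnonzero(spike_trains[t]):
            act += W[:, j]  # addition only, triggered by the spike event
    return act

rng = np.random.default_rng(1)
W = rng.integers(-8, 8, size=(4, 6))             # illustrative weights
spike_trains = rng.integers(0, 2, size=(20, 6))  # illustrative 0/1 spikes
act = accumulate_spikes(W, spike_trains)
counts = spike_trains.sum(axis=0)                # spikes per input neuron
print(act, W @ counts)
```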

How are weights implemented? They can be digital or analog. Usually they are digital, as analog memories fade during operation and would require large circuitry per weight to avoid leaking. Digital weights add an amount of activation proportional to their digital value.

Where are these weights stored? Locally there is limited storage capacity per neuron, but if that is not enough one has to resort to an external digital memory. More below in: “Analog versus digital memories”.

Neuromorphic systems use asynchronous communication of spikes in a clock-free design. This saves power by only communicating when needed, and not at the tick of an unrelated clock. But this also complicates system design and communication infrastructure. More below in: “System design”.

Note: in order to get values in and out of a neuron array, we need to convert digital numbers (such as 8-bit pixels in an image) into spike trains. This can be done by converting the intensity value into a frequency of pulses. Ideally this conversion is only performed at the input and output of the array.
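One possible way to turn an intensity into a pulse frequency is sketched below in Python. It is an assumption-laden illustration rather than how any specific chip does it: time is divided into 255 slots, and an accumulator spreads exactly `pixel` spikes evenly across them. Decoding simply counts spikes over the window. The function names are hypothetical.

```python
def pixel_to_spikes(pixel, steps=255):
    """Encode an 8-bit intensity (0..255) as a 0/1 spike train whose
    spike rate over `steps` time slots is proportional to the intensity."""
    acc = 0
    train = []
    for _ in range(steps):
        acc += pixel
        if acc >= steps:      # enough intensity accumulated: emit a spike
            train.append(1)
            acc -= steps
        else:
            train.append(0)
    return train

def spikes_to_pixel(train):
    """Decode by counting spikes and rescaling to the 0..255 range."""
    return round(sum(train) * 255 / len(train))

train = pixel_to_spikes(128)
print(sum(train), spikes_to_pixel(train))
```

Brighter pixels produce denser spike trains, and a value of 0 produces no spikes at all, so nothing is communicated for dark inputs.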

Analog versus digital memories:

Neural network computation requires computing the product of an M*M matrix by an M vector. M is typically in the range of 128–4096.

Since a processor would have to compute multiple such operations in a sequence, it will need to swap matrices, relying on an external memory for storage. This requires a digital memory, since values are digital numbers.

An analog processor would have to convert values from analog to digital and back to perform operations on digital memories. There needs to be an AD/DA converter at each port from/to memory. These devices need to operate at memory speed, usually on the order of 1 GHz, which is at the limit of current converters and requires a very large amount of power.

Converting back and forth with AD/DA at each layer is prohibitive. Therefore an analog neural network would have to perform multiple layers in a row in analog mode, which is noisy and degrades signals. This would also require hardware for multiple layers: N layers of M*M hardware multiplier blocks need to be available. This requires a large micro-chip silicon area.
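To get a feel for the scale, the short Python sketch below counts the multiplier blocks needed when every layer has its own M*M array and nothing is re-used. The values of M are taken from the range quoted earlier; the layer count of 10 is purely an illustrative assumption.

```python
def multiplier_blocks(M, n_layers):
    """Multiplier blocks needed if each layer owns an M*M array (no re-use)."""
    return n_layers * M * M

# M spans the range quoted in the text; n_layers=10 is an assumption.
for M in (128, 1024, 4096):
    print(M, multiplier_blocks(M, n_layers=10))
```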

In contrast, digital hardware can easily store to and retrieve from digital memory, and hardware can be re-used, requiring less micro-chip silicon area. In addition, there is no overhead of data conversion.

System design:

Neuromorphic spiking networks use clock-less design, and asynchronous digital design. Few engineers are trained to design such systems.

Analog requires even more expertise and even fewer engineers are available.

Neuromorphic systems tend to be more complicated to design than conventional digital ones. They are more error prone, require more design iterations and non-standard tools, and are sensitive to micro-chip process variations.

Sparsity:

Some systems can be superior in sparse computation domains, where instead of computing the entire 2*M² operations, they just need to compute a fraction of them. Note: in deep neural networks, inputs are not sparse (images, sounds), but can be sparsified by hidden layers. Many values are zero or very close to zero, and can be highly quantized.

Let us assume here that we may have no sparsity in the first 1–2 layers, and up to 100x sparsity in some hidden layers.

There is no difference between conventional digital systems and neuromorphic systems here, as both can perform sparse operations. We do need hardware that supports sparse matrix multiplications (not currently implemented in many deep learning frameworks and hardware)!
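As a rough sketch of what sparse computation buys, the Python below computes a matrix-vector product while skipping zero activations, and counts the multiply-add pairs actually performed. The sizes are illustrative assumptions chosen to give the 100x sparsity assumed above: 2 non-zero inputs out of 200. The name `sparse_matvec` is hypothetical and does not refer to any library routine.

```python
import numpy as np

def sparse_matvec(W, x):
    """Compute W @ x, skipping zero entries of x.

    Returns the result and the number of multiply-add pairs performed.
    """
    y = np.zeros(W.shape[0])
    macs = 0
    for j in np.flatnonzero(x):   # only non-zero activations do any work
        y += W[:, j] * x[j]
        macs += W.shape[0]
    return y, macs

M, nonzero = 200, 2               # 100x sparsity (illustrative assumption)
rng = np.random.default_rng(2)
W = rng.standard_normal((M, M))
x = np.zeros(M)
idx = rng.choice(M, size=nonzero, replace=False)
x[idx] = 1.0 + rng.random(nonzero)  # strictly non-zero activations
y, macs = sparse_matvec(W, x)
print(macs, M * M)                # sparse versus dense multiply-add count
```

The same skipping logic applies whether the zeros are skipped by a digital sparse engine or simply never sent as spikes, which is why neither approach has an inherent advantage here.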

Final note:

Neuromorphic systems are still hard to design. To scale, they require analog memories or fast and low power AD/DA converters, which are currently not available.

But they can offer large energy savings if compute is in analog and communication uses inter-event timing.

About the author

I have almost 20 years of experience in neural networks in both hardware and software (a rare combination). See about me here: Medium, webpage, Scholar, LinkedIn, and more…