A picture is worth a thousand words

Evolution of large language models into artificial brains through multiple sensory modalities

Surprising as it may seem, our very human predictive abilities hide a path to understanding our intelligence. How do we solve so many tasks, learn from so many new experiences, and adapt so easily to new environments?

The recent (2022–23) success of large language models (LLMs) such as ChatGPT and GPT-X tells a story. Trained to predict the next word in a sentence, these models showed superb language proficiency. The key ingredients are large amounts of data (more than you could read in a lifetime) and a simple predictive algorithm.
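Real LLMs use deep transformer networks trained on enormous corpora, but the training signal itself is simply "predict the next word". A minimal sketch of that objective, assuming a toy three-sentence corpus and a count-based bigram model standing in for the neural network:

```python
from collections import Counter, defaultdict

def train_bigram(corpus):
    """Count how often each word follows another word in the corpus."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

corpus = [
    "the rose is red",
    "the rose is fragrant",
    "the rose is red and soft",
]
model = train_bigram(corpus)
print(predict_next(model, "rose"))  # is
print(predict_next(model, "is"))    # red
```

No labels were needed: every next word in the text serves as its own training target, which is what makes the approach scale to huge unlabeled datasets.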

When we think of language, we often forget that it is an abstract representation of reality, of the world we live in: a set of labels we attach to concepts and ideas of the real world so that we can communicate. But “the rose exists before its name”, meaning that any object in the real world, like a rose, is real even without a word for it. What is a “rose”, then?

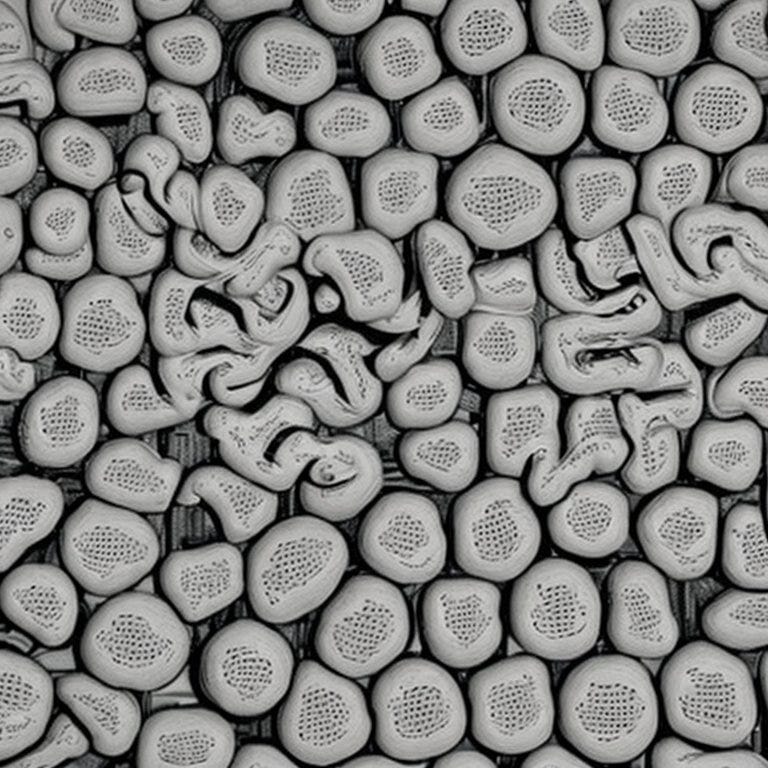

A picture is worth a thousand words

A concept in our environment is just a collection of data from our sensors. For us humans, a rose is equivalent to its image, its perfume, its delicate touch, its movement in the wind. That “concept” is the essence of an object, our qualia: our subjective ensemble of sensory information fused into one token of knowledge.

This is the future of artificial brains and the evolution of LLMs: being able to represent “concepts” from multi-modal information.
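One simple way to picture "one token of knowledge" is to embed each sensory stream separately and fuse the results into a single vector. The sketch below assumes per-modality encoders already exist and have produced hypothetical 3-dimensional embeddings; averaging unit-length vectors is only one of many possible fusion choices (concatenation or attention are common alternatives).

```python
import math

def normalize(v):
    """Scale a vector to unit length so no modality dominates by magnitude."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in v]

def fuse(modalities):
    """Average unit-length per-modality embeddings into one concept vector."""
    vectors = [normalize(v) for v in modalities.values()]
    dim = len(vectors[0])
    mean = [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]
    return normalize(mean)

def cosine(a, b):
    """Cosine similarity of two unit-length vectors (just their dot product)."""
    return sum(x * y for x, y in zip(a, b))

# Hypothetical embeddings; a concept may be observed through any subset of sensors.
rose = fuse({"vision": [0.9, 0.1, 0.0], "smell": [0.8, 0.0, 0.2], "touch": [0.7, 0.3, 0.1]})
tulip = fuse({"vision": [0.8, 0.2, 0.1], "touch": [0.6, 0.4, 0.0]})
thunder = fuse({"audio": [0.0, 0.1, 0.9], "vision": [0.1, 0.0, 0.8]})
print(cosine(rose, tulip) > cosine(rose, thunder))  # True
```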

Multiple modalities

LLMs are trained on text only; here we propose to use instead:

- visual information

- audio

- touch, proprioception

- text, tags, etc.

This requires the artificial brain to be embodied, or at the very least to have a fixed set of sensors that remain consistent over its entire learning lifetime.

Learning

Training these new multi-modal artificial brains relies on powerful unsupervised learning algorithms. Or should we call them self-supervised? There is an undeniable need for data to train on, but not for data that comes with labels.

How do learning algorithms evolve beyond predicting the next word in a sentence? There are multiple ways to predict concepts:

- predicting the next concept in a video or audio stream

- predicting what is around a concept in visual space
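Both objectives can be built from unlabeled data alone. A minimal sketch, assuming concepts have already been extracted as discrete symbols (the strings below are hypothetical): a sliding window turns a stream into (context, next concept) pairs, and a grid neighborhood turns an image-like layout into (concept, surrounding concept) pairs.

```python
def next_step_pairs(stream, context=2):
    """Turn an unlabeled sequence into (context, target) training pairs.

    The target is simply the element that follows the context window,
    so the data supplies its own labels.
    """
    return [
        (tuple(stream[i:i + context]), stream[i + context])
        for i in range(len(stream) - context)
    ]

def neighbor_pairs(grid, row, col):
    """Pair a grid cell with each of the cells around it (its visual context)."""
    pairs = []
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            r, c = row + dr, col + dc
            if (dr, dc) != (0, 0) and 0 <= r < len(grid) and 0 <= c < len(grid[0]):
                pairs.append((grid[row][col], grid[r][c]))
    return pairs

frames = ["bud", "opening", "bloom", "wilt"]
print(next_step_pairs(frames))
# [(('bud', 'opening'), 'bloom'), (('opening', 'bloom'), 'wilt')]

grid = [["sky", "sky", "sky"],
        ["leaf", "rose", "leaf"],
        ["soil", "stem", "soil"]]
print(len(neighbor_pairs(grid, 1, 1)))  # 8
```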

In essence, self-supervision is the search for sequential data, either along time or through perturbations in space that occur naturally, such as noise masking in audio or occlusion in vision. Static data can be turned into a sequence by:

- manipulating an object, changing point of view

- listening to the concept multiple times

- masking portions of the data
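Masking is the simplest of these perturbations to write down: hide part of the input and ask the model to reconstruct it. A sketch, assuming token-level masking with a hypothetical `<mask>` symbol, in the spirit of masked language modeling; for audio or images the same idea would apply to time frames or image patches.

```python
import random

MASK = "<mask>"

def mask_sequence(tokens, rate=0.25, seed=None):
    """Hide a fraction of tokens; the hidden originals become the targets.

    Returns the corrupted input and a dict {position: original token}.
    """
    rng = random.Random(seed)
    n_mask = max(1, round(len(tokens) * rate))
    positions = sorted(rng.sample(range(len(tokens)), n_mask))
    corrupted = list(tokens)
    targets = {}
    for p in positions:
        targets[p] = corrupted[p]
        corrupted[p] = MASK
    return corrupted, targets

tokens = "the rose moves gently in the evening wind".split()
corrupted, targets = mask_sequence(tokens, rate=0.25, seed=0)
print(corrupted)
print(targets)
```

The model sees `corrupted` and is trained to output the entries of `targets`, so once again the labels come for free from the data.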

See Note 1 below for additional comments.

Of course, there is the problem of training cost and scale. Few institutions can afford to train models that cost millions of dollars; GPT, for example, cost more than $5M to train. We need a distributed and open system to create foundation models, one that is operated by and serves a large majority of people. See this post for more details.

The co-occurrence of multiple sensory domains in space or time is another foundational learning principle that can be used to learn “concepts”. See this post and this post for more on this.
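The post does not name a specific algorithm here. One widely used way to turn co-occurrence into a training signal is a contrastive, InfoNCE-style loss, similar in spirit to the objective used by CLIP-like models: embeddings of signals that occur together (same index in the batch) should score higher than all mismatched pairs. A one-directional sketch, assuming unit-length embeddings and a hypothetical temperature of 0.1:

```python
import math

def info_nce(audio, image, temperature=0.1):
    """Contrastive loss: audio[i] and image[i] co-occurred, so their similarity
    should beat the similarity of audio[i] with every other image in the batch."""
    loss = 0.0
    for i, a in enumerate(audio):
        logits = [sum(x * y for x, y in zip(a, v)) / temperature for v in image]
        m = max(logits)  # subtract the max before exp() for numerical stability
        log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
        loss += log_sum_exp - logits[i]  # cross-entropy with the true partner as target
    return loss / len(audio)

aligned = [[1.0, 0.0], [0.0, 1.0]]
swapped = [[0.0, 1.0], [1.0, 0.0]]
print(info_nce(aligned, aligned) < info_nce(aligned, swapped))  # True
```

Minimizing this loss pulls co-occurring sounds and sights toward each other in a shared space; a symmetric version would also average in the image-to-audio direction.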

What makes these multi-modal models candidates for artificial brains?

Knowledge is organized in a graph where nodes are concepts and links form relationships between concepts. These links connect ideas and experiences into related sets that can summon and elicit each other.
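The graph view can be sketched with an adjacency list. Here "summoning" related concepts is modeled, as an illustrative assumption, by a breadth-first search that returns every concept within a few links of a starting concept; the concepts and links are hypothetical.

```python
from collections import deque

def related_concepts(graph, start, max_hops=2):
    """Breadth-first search: map each concept reachable from `start` within
    `max_hops` links to its distance. Closer concepts are more easily evoked."""
    dist = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if dist[node] == max_hops:
            continue  # do not expand beyond the hop limit
        for neighbor in graph.get(node, ()):
            if neighbor not in dist:
                dist[neighbor] = dist[node] + 1
                queue.append(neighbor)
    del dist[start]
    return dist

graph = {
    "rose": ["red", "perfume", "thorn"],
    "red": ["rose", "fire"],
    "perfume": ["rose", "garden"],
    "thorn": ["rose", "pain"],
    "fire": ["red", "heat"],
}
print(related_concepts(graph, "rose", max_hops=1))
# {'red': 1, 'perfume': 1, 'thorn': 1}
```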

This is not much different from the language model that emerges from training an LLM. A language model is also a graph: it connects words together, sets the rules for creating sentences, gives rise to semantic meaning, and groups information.

We are fascinated by the zero-shot abilities of today's LLMs: their ability to perform tasks they were not explicitly trained for. This ability will be even more pronounced in multi-modal artificial brains, because knowledge will be inherently connected across domains, allowing them to reason about images, videos, and audio, correlating speech to words, words to frames, and lyrics to music.
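Cross-domain zero-shot behavior can be sketched once every modality lives in one shared embedding space: an image or a sound is "classified" by finding the nearest text label, with no classifier ever trained for that task. The sketch assumes the hard part, learning the shared space, is already done; the 3-dimensional vectors are hypothetical.

```python
import math

def cosine(a, b):
    """Cosine similarity between two vectors of any length."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def zero_shot_label(query, label_embeddings):
    """Return the label whose embedding is most similar to the query embedding."""
    return max(label_embeddings, key=lambda name: cosine(query, label_embeddings[name]))

# Hypothetical text-label embeddings in the shared space.
labels = {
    "rose": [0.9, 0.1, 0.1],
    "violin": [0.1, 0.9, 0.2],
    "rain": [0.1, 0.2, 0.9],
}
image_of_flower = [0.8, 0.2, 0.0]   # from a hypothetical image encoder
audio_of_strings = [0.2, 0.7, 0.1]  # from a hypothetical audio encoder
print(zero_shot_label(image_of_flower, labels))   # rose
print(zero_shot_label(audio_of_strings, labels))  # violin
```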

A good definition of intelligence, after all, may very well be just our ability to connect concepts from multiple areas of knowledge without an explicit prompt.

Lifelong Learning

Ask an LLM what happened at the 2022 soccer World Cup, and it will tell you that its factual knowledge stops in mid-2021. How do we keep artificial brains up to date? The same way we humans keep abreast of new knowledge: by continuing to learn.

It is easy to keep training a neural network: you just give it more samples every day. What research and development must address is the drift in knowledge that unbalanced training can cause. This happens to humans too, when environmental changes cause knowledge and ideas to drift. Forgetting is also common when a body of knowledge is not refreshed for some time. Artificial brains will have exactly the same problems.
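One common mitigation for drift and forgetting, not named in the post and shown here only as an illustrative choice, is rehearsal: mix a random sample of older data into each day's training. A sketch of a replay buffer kept with reservoir sampling, where the string samples and the commented-out `train_step` call are hypothetical stand-ins:

```python
import random

class ReplayBuffer:
    """Fixed-size memory holding a uniform random sample of everything seen so far."""

    def __init__(self, capacity, seed=None):
        self.capacity = capacity
        self.samples = []
        self.seen = 0
        self.rng = random.Random(seed)

    def add(self, sample):
        self.seen += 1
        if len(self.samples) < self.capacity:
            self.samples.append(sample)
        else:
            # Reservoir sampling: each sample seen so far stays in memory
            # with equal probability capacity / seen.
            j = self.rng.randrange(self.seen)
            if j < self.capacity:
                self.samples[j] = sample

    def mixed_batch(self, new_samples, n_old):
        """Combine today's data with up to n_old replayed older samples."""
        old = self.rng.sample(self.samples, min(n_old, len(self.samples)))
        return list(new_samples) + old

buffer = ReplayBuffer(capacity=100, seed=0)
for day in range(10):
    todays = [f"day{day}-sample{i}" for i in range(50)]
    batch = buffer.mixed_batch(todays, n_old=25)
    # train_step(batch)  # hypothetical training call on new + replayed data
    for s in todays:
        buffer.add(s)
```

Because old samples keep reappearing in every batch, the network is never trained on today's data alone, which limits how far its knowledge can drift.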

Using tools

An artificial brain will not need to become a clone of each and every tool. It will not need to memorize all Earth’s knowledge but rather be able to retrieve it. Not become a calculator, but rather learn to use one. Not dig holes, but rather drive tractors and machines.

Teaching by example sequences, for instance how to use a tool or how to extract knowledge from a website, is another parallel with human training. Can such applications be built on top of these artificial brains? Most definitely, since the model's predictive ability lets it learn a sequence of steps to accomplish a goal, much like training a human to use a tool.
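The "learn to use a calculator" idea can be made concrete with a tiny dispatcher: the model's predicted step names a tool, and the surrounding program runs it and returns the result. The `NAME: argument` step format and the `CALC` tool are hypothetical conventions for this sketch, not an existing API; the calculator parses arithmetic with Python's `ast` module instead of calling `eval`.

```python
import ast
import operator

OPS = {ast.Add: operator.add, ast.Sub: operator.sub,
       ast.Mult: operator.mul, ast.Div: operator.truediv}

def calculator(expression):
    """A tiny, safe arithmetic tool: binary + - * / on numbers, with parentheses."""
    def walk(node):
        if isinstance(node, ast.Expression):
            return walk(node.body)
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in OPS:
            return OPS[type(node.op)](walk(node.left), walk(node.right))
        raise ValueError("unsupported expression")
    return walk(ast.parse(expression, mode="eval"))

TOOLS = {"CALC": calculator}  # hypothetical tool registry

def run_step(model_output):
    """Execute a step like 'CALC: 12 * 7' if it names a known tool;
    otherwise return the model's text unchanged."""
    name, sep, argument = model_output.partition(":")
    tool = TOOLS.get(name.strip())
    if sep and tool:
        return str(tool(argument.strip()))
    return model_output

print(run_step("CALC: 12 * 7"))      # 84
print(run_step("The rose is red."))  # The rose is red.
```

The model never has to become the calculator; it only has to learn, from example sequences, when to emit a tool step and how to use its result.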

The interesting thought that arises is that these artificial brains are in fact getting closer and closer to a human brain, with parallels such as curriculum training to incrementally increase abilities and knowledge.
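Curriculum training can be sketched as feeding the model stages of increasing difficulty, each stage also revisiting the easier material. The difficulty score used here (word length) is a hypothetical stand-in; in practice it might come from model loss or human judgment.

```python
def curriculum_stages(samples, difficulty, n_stages=3):
    """Split samples into cumulative stages of increasing difficulty.

    Stage k contains the easiest k / n_stages fraction of the data, so
    earlier material keeps being revisited as harder material is added.
    """
    ordered = sorted(samples, key=difficulty)
    stages = []
    for k in range(1, n_stages + 1):
        cutoff = round(len(ordered) * k / n_stages)
        stages.append(ordered[:cutoff])
    return stages

words = ["a", "rose", "is", "fragrant", "petal", "photosynthesis"]
for stage in curriculum_stages(words, difficulty=len):
    print(stage)
# ['a', 'is']
# ['a', 'is', 'rose', 'petal']
# ['a', 'is', 'rose', 'petal', 'fragrant', 'photosynthesis']
```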

Final notes

So yep: multi-modal, prediction, co-occurrence. All ingredients of artificial brains implemented with neural networks. This is the future you will see happen in just a few months.

The quick development of ever more capable artificial brains is scaring a lot of people, as has happened before with every new and disruptive technology. Yet even if all of us wanted to use these models at large scale, there are significant hurdles to overcome. First is the cost of training and deploying models. Second is the inability to share models and keep training them while sharing the benefits. Third is the centralization of such models because of the cost and expertise needed to train them.

The rise of artificial brains will bring out at once all the problems we have in current neural networks: continual learning, training and inference efficiency, distributed learning, knowledge drift and forgetting, dataset biases, ethical use, toxic behavior, and more.

There is much work to be done, with both small and big steps forward. Are you ready to join us for the research ahead?

Note 1

One complication is that in a stream of concepts from a video, for example, predicting the next concept does not mean predicting the next frame, but rather predicting how concepts morph over time. Prediction in video does not translate to fixed windows; it needs to recognize when concepts have changed “enough” from each other. A bit of a chicken-and-egg problem, isn’t it? But this is a good example of the pitfalls of self-supervised learning and current methods in AI/ML. More insight, and more research, is needed here.

About the author

I have more than 20 years of experience in neural networks in both hardware and software (a rare combination). About me: Medium, webpage, Scholar, LinkedIn.

If you found this article useful, please consider a donation to support more tutorials and blogs. Any contribution can make a difference!