Artificial Intelligence in the year 2020: a new decade

We are at the end of a decade that will be forever known as the “Decade of Deep Learning and AI”. What lies ahead is exciting and will continue to change our lives.

The path of a new decade

Neural networks, back-propagation, the promise of intelligent systems, Artificial Intelligence (AI): all have been in the making for many decades. But only with the computing power of the years after 2010, and with the large amount of data produced by our cell-phones since 2001 or so, did neural networks become really useful in categorizing and learning from data.

Deep Learning and neural networks were being developed by a handful of research groups and believers in the years 2008–2012 and before. But only after 2012, and more precisely in 2014–2015, did Deep Learning and neural networks become popular. Since then we have seen them breaking records almost daily in:

- categorizing and captioning images

- identifying your face in images

- autonomous driving

- voice-enabled smart-home devices

And this is just to name a few applications. There are a myriad more that we will never know about, hidden in software we use every day, in the cloud, in our computers, in our appliances, and many more that use these algorithms to run the world we live in.

What follows are my own personal opinions on where Deep Learning (DL), neural networks (NN) and machine learning (ML) are headed in the larger field of artificial intelligence (AI), and how we can get more and more sophisticated machines that can help us in our daily routines.

Please note that these are not predictions or forecasts, but rather a detailed analysis of the trajectory of the fields, the trends and the technical needs we have to meet to achieve useful artificial intelligence.

We will also examine low-hanging fruit, such as applications that are surely going to advance to products in the next decade.

Goals

The goal of DL, ML, and AI is to produce machines with abilities that go beyond human. Autonomous vehicles, smart homes, artificial assistants, and security cameras are the first potential applications. Home cooking and cleaning robots are a second target, together with surveillance drones and robots. Another one is assistants on mobile devices or always-on assistants. Another is full-time companion assistants that can hear and see what we experience in our life. One ultimate goal is a fully autonomous synthetic entity that can behave at or beyond human-level performance in everyday tasks.

Below, I will describe the evolution in the next decade of:

- applications: what we have, what we can do, what we can dream

- neural networks: the tool that made this all possible

Applications

From the previous Goals section: artificial intelligence and neural networks are our way to evolve machines to perform at the level of humans and beyond.

On the surface, we have accomplished a lot already with these tools, as one can see from the enormous number of applications we have been able to make progress on in just the last decade.

But since we are at the doorstep of a new decade, one can stop and think: what have we really enabled so far? Has our daily life changed? And what new applications would really change the way we live?

I look at our daily house activities … and to me they have not changed much for decades! Look at American movies from the 50s–60s: daily life mostly looks the same! Same kitchen, where the microwave oven is the one major innovation, same cars, albeit much safer and with more gadgets, same routines.

One big difference is the presence of mobile phones, which have replaced the television and radio sets and occupy much of people’s time.

And much of the neural network innovation that can be experienced today is indeed inside these devices: cameras with neural networks providing better pictures, more social applications, many of which use neural networks to connect us in more ways, facial recognition software for our pictures and social circles, better web searching tools, and many more.

Outside of cellular phones, the most important neural network innovations so far are listed below:

talk to it! will it listen?

1- Voice-recognition assistants: they are getting better and better at listening to our voice commands

one major upgrade to our lives that the last decade produced for us: voice-enabled assistants

We can argue whether they are a major upgrade to our lives, but they are at least a new device in our home, one that we can talk to and interact with. They are still limited in many ways: they mostly lack the ability to create models of the people they converse with, and they do not remember the history of conversations. Right now, they are only useful to play with: they play music, tell jokes and stories, and tell you about the weather, but that is going to change in the next decade. The major hurdle to improving conversational systems is that we need neural networks capable of storing conversation information for a long time, developing a sense of the different personalities that interact with them, and generalizing from the acquired knowledge to be able to predict and assist future user requests. This requires major innovations in neural network architectures that are not easy and are not quite here yet. More on this in the Neural Networks section below.

home automation needs neural networks

2- Home automation: face-recognition, voice-enabled assistants, the promise of a smarter home. I used to joke in my early life that we were going to build the “photonic house”, a smart home for all our friends. But today I walk into a room and I still have to operate a light switch! A simple task like detecting ingress / egress and turning on a light automatically for us is still a challenge for home automation. We have remote-operated garage doors, we have wifi thermostats, we have voice-enabled switches and devices. But honestly, today we all know these devices do not provide much service…

today home automation is complex tech and gadgets that are not very useful around the house

I believe there is much more that we can do for home automation, and it starts with building a smart house brain, a bit like the conversational engine system reviewed above, but maybe a bit simpler. We still need models of each user, of each person living in the house. We still need to remember some of their history, of their preferences, but then we need to learn and generalize. Perform tasks for users, the house occupants, before they need it. In essence: predict their needs, which is what neural networks are for anyhow!

Some may argue that we need more sensors, but that is only part of the problem. A thermostat has a simple sensor that detects motion in front of it. The thermostat knows if there is someone in the house. But we can do better: we can have similar sensors installed in every door, so we know who comes and goes. We all carry a cellphone: this can be used at times to know who is who in the house. We have wifi switches and plugs. We just need a brain. What happened to the smart home-automation hubs? Lost in the fog of oversized corporations?

In terms of neural networks, this is interesting applied work to do: building a system that can identify multiple people and remember a history of usage and interaction for a long time is something we can do today. Some of the elements here are similar to the conversation devices we mentioned above, but for home automation you only need to learn a simple language with a few verbs (turn on light, turn on heat, open garage, etc.), rather than entire human languages.
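To give a feel for how small such a language can be, here is a minimal sketch of a command parser. The verb list, the device list and the function name `parse_command` are all hypothetical, chosen only for illustration; a real system would learn these from its users rather than hard-code them.

```python
import re

# Hypothetical vocabulary: a few verbs and devices, as in the examples above.
VERBS = {"turn on": "on", "turn off": "off", "open": "open", "close": "close"}
DEVICES = {"light", "heat", "garage"}


def parse_command(text):
    """Return (action, device) for a recognized command, else None."""
    # Lowercase, drop punctuation, and ignore a few filler words.
    words = re.sub(r"[^a-z ]", "", text.lower()).split()
    words = [w for w in words if w not in {"the", "a", "please"}]
    phrase = " ".join(words)
    for verb, action in VERBS.items():
        if phrase.startswith(verb + " "):
            device = phrase[len(verb) + 1:]
            if device in DEVICES:
                return action, device
    return None


print(parse_command("Turn on the light"))    # ('on', 'light')
print(parse_command("Open garage, please"))  # ('open', 'garage')
print(parse_command("Tell me a story"))      # None
```

Anything outside the tiny vocabulary is simply rejected, which is exactly why this problem is so much easier than open conversation.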

cameras are eyes for home automation

Of course in home automation there are the cameras! At a previous company I founded, we developed an entire home automation system based on smart cameras. Cameras augmented with face-recognition neural networks can recognize people, distinguish household members from others, and detect people, pets, packages, cars, and objects. Ultimately they can be the eyes of a home-automation system. The challenges with cameras are many, some listed here:

- privacy: where is the camera, and are we ok with the camera monitoring certain areas?

- where is the camera video sent to? does it reside in the house or is it sent to the cloud? If in the house, is it protected from theft and damage?

- who processes the video from the camera for automation? A local server needs computing power and has a cost (maybe a PC with 1–2 GPUs), while a cloud computer is usually more expensive to run, has privacy issues, and requires streaming video over the internet, which uses significant bandwidth from the home cable connection.

Today smart cameras can recognize people, pets, and a few objects using cloud services. These are expensive and privacy is a concern. Also, internet bandwidth usage is high, so only 1–2 cameras per household are really feasible (if you want to maintain HD streaming to 4 devices in the house, as is typical).

As such, cameras now have smart capabilities but are not yet fully utilized, most likely because a cost-effective business model has not been identified. I believe a custom microchip that can process video inside the camera itself will reduce cost and open the market to more useful solutions.

feedback improves neural network

3- Feedback: the most complex problem we have in all the applications mentioned above is how the user can provide feedback to the system in the most natural way. When a fellow human being says something wrong, we can correct them with a sentence: “it is not X, it is Y”. This provides a sample for the neural system to learn and adapt. But providing feedback to artificial systems is a large user-interface and system design problem. Voice-enabled assistants provide an example: many of us have tried to correct our assistants. It usually ends in gross disappointment with the capabilities of the system.

Maybe there should be common ways to report mistakes and errors, for example using a keyword: “error: it is not X it is Y” or something like this. This would give manufacturers of the service an easy way to update their dataset. User customization is also very important, so the device needs to know who provides the correction, and save the new knowledge only for that specific user.
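As a rough illustration of the keyword idea, the sketch below recognizes the “error: it is not X it is Y” pattern and stores the correction per user. The exact phrase format, the in-memory `corrections` store and both function names are assumptions made for this example, not an existing assistant API.

```python
import re
from collections import defaultdict

# Hypothetical spoken/typed format: "error: it is not X it is Y"
CORRECTION = re.compile(r"^error:\s*it is not (.+?),? it is (.+)$", re.IGNORECASE)

# Corrections are stored per user, so one person's fix does not
# change the system's behavior for everyone else.
corrections = defaultdict(dict)


def record_feedback(user, utterance):
    """Store a correction for `user`; return (wrong, right) or None."""
    match = CORRECTION.match(utterance.strip())
    if match is None:
        return None
    wrong, right = match.group(1).strip(), match.group(2).strip()
    corrections[user][wrong] = right
    return wrong, right


def apply_corrections(user, answer):
    """Replace an answer with the user's stored correction, if any."""
    return corrections[user].get(answer, answer)


record_feedback("alice", "error: it is not jazz it is blues")
print(apply_corrections("alice", "jazz"))  # blues
print(apply_corrections("bob", "jazz"))    # jazz
```

The stored pairs are also exactly the kind of labeled samples a manufacturer could fold back into a training dataset.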

Overall, providing feedback is a challenging area for correcting, advancing, and personalizing neural systems, and it will remain a challenge for the next decade.

Dude: where is my autonomous car?

4- Autonomous vehicles: we are all waiting for our digital chaperons, and much progress has been made; one can see the status of things in articles like this one. The major challenge in pushing the safety of vehicles beyond human capabilities is the ability of neural networks to generalize from a reduced set of curated and labeled road scenes to a seemingly infinite number of circumstances that can occur on the road.

I argue that we need a new kind of neural network for autonomous vehicles, one that can not only process the environmental inputs, but also dream or imagine what the results of actions will be. See predictive networks in the Neural Networks section below.

5- Robots: from assembly lines to home robots, the path of robotics gets lost. In assembly lines, powerful robot arms are able to do amazing feats: they can build entire cars! But there is a catch: they can only do it in an almost perfect environment, where items always look the same, where items are positioned in a precise way, and where instruction and control are deterministic and fixed at design and programming time, not after.

the hope for a robotic cook in the house: maybe this decade?

The situation is much different for a robot at home. Imagine we want to make a robot that can cut up an apple with a kitchen knife. The robot would have to locate the apple, which is placed in a random position on a bench (random because it has to be manipulated by both humans and robots, so uncertainty!), find an adequate way to grasp the apple and the knife, find an appropriate surface to conduct the cutting task, operate the knife in slippery conditions, and adapt to the variability in the size of the apple, the location of its seeds, the size of its core, etc… you get the idea:

it is a nightmare of generalization

This is the very same problem as autonomous vehicles, which are in fact one instance of the modern robots coming in the next decade. We therefore again argue that a new kind of neural network is needed for robotics, one that can not only process the environmental inputs, but also predict what the results of actions will be. See predictive networks in the Neural Networks section below.

The dream is that in the next decade robots will be able to operate in our homes, working with us without posing a threat or danger to any household member. They will be able to clean the house for us, not just dust floors with random motion algorithms (as the robotic vacuums we have today do), but also dust any surface by lifting items. They will be able to load a dishwasher, and most importantly they will be able to cook like we do, by operating pots and utensils and raw ingredients.

Neural Networks

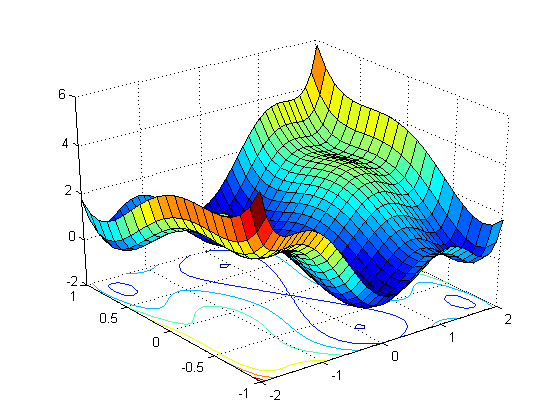

At the core of all the DL and AI revolutions are neural networks, an algorithm that takes inspiration from real brains and neurons and creates an artificial construct capable of learning to go from data to decisions. In order to train a neural network we define a dataset and a NN architecture (topology). Both will affect the way the algorithm will perform. Data is generally in the form of a long list of samples = [input, label], where we define a mapping between inputs and labels. The weights of the neural network will be shaped by the dataset, and will approximate a high-performance mapping between inputs and labels.
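To make the samples = [input, label] picture concrete, here is a minimal sketch that trains a single artificial neuron on a toy dataset with gradient descent. The dataset, the learning rate and the number of epochs are made up for illustration; real networks have many layers and far more data, but the weights are shaped by the samples in the same way.

```python
import math

# Toy dataset of [input, label] samples: label is 1 when x1 + x2 > 1.
samples = [
    ([0.0, 0.0], 0), ([0.2, 0.3], 0), ([0.4, 0.1], 0), ([0.1, 0.6], 0),
    ([0.9, 0.8], 1), ([0.7, 0.9], 1), ([1.0, 0.5], 1), ([0.6, 0.7], 1),
]


def predict(weights, bias, x):
    """Single artificial neuron: weighted sum followed by a sigmoid."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def train(samples, epochs=2000, lr=0.5):
    """Shape the weights with stochastic gradient descent on the log loss."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, label in samples:
            # Gradient of the log loss with respect to the weighted sum z.
            error = predict(weights, bias, x) - label
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias


weights, bias = train(samples)
correct = sum((predict(weights, bias, x) > 0.5) == bool(y) for x, y in samples)
accuracy = correct / len(samples)
print(weights, bias, accuracy)
```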

minimizing a loss function is easy, but creating one is hard

What is the goal of a neural network? When we train a neural network we have to identify its final goal. What is the network going to do? This has to do with a mathematical formulation of the goal, usually called the Cost or Loss Function. For categorizing images, for example, a cost function is the error between the predicted label and the right label. But for a robot cutting an apple the cost function becomes much more difficult to define. How can we create a function that measures how well the robot is doing? As humans it seems simple: we just need to see if the apple was cut in a similar way as we instructed the robot. But how can we turn this task into an equation that can be solved by gradient descent optimization algorithms?
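For the image-categorization case, one common way to write "the error between the predicted label and the right label" is the cross-entropy loss. The sketch below assumes that choice (the text above does not name a specific loss), and the three output scores are hypothetical numbers.

```python
import math


def softmax(scores):
    """Turn raw network outputs into probabilities that sum to 1."""
    shifted = [s - max(scores) for s in scores]  # for numerical stability
    exps = [math.exp(s) for s in shifted]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(scores, right_label):
    """Cost is low when the right label gets a high probability."""
    return -math.log(softmax(scores)[right_label])


scores = [2.0, 0.5, -1.0]        # hypothetical outputs for 3 categories
print(cross_entropy(scores, 0))  # small: the network favors label 0
print(cross_entropy(scores, 2))  # large: the network disfavors label 2
```

Nothing this compact exists for "the apple was cut the way we asked", which is the whole difficulty.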

I argue that the design of cost functions for specific applications will be a major focus of neural network research in the next decade. Few people are talking about this, maybe because they take for granted existing algorithms and solutions to simple or well-defined problems. See all tools we have at our disposal today here, for an example.

And if we only provide examples and a fixed mathematical cost function, then how can a system learn even better and more efficient ways to perform a task? How can it, in other words, become better than the humans that trained it? We would want that, for example, in an autonomous car, so it can learn super-human capabilities to avoid accidents, right? The solution is simple:

the cost function needs to be learned by the neural system

As simple as it is to write, it is not so simple to do. We need a lot of research in this area, and thinking outside the box, coupled with predictive neural networks and learning theories.

it predicts a great decade for neural networks

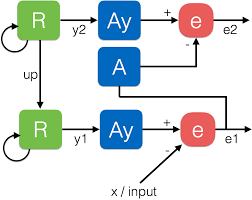

Predictive network: a new kind of neural network. We have talked before about the limitations of neural networks as they are today. They cannot predict, cannot reason on content, and have temporal instabilities: we need a new kind of neural network that you can read about here. A major limitation of current neural networks is that they do not possess one of the most important features of human brains: their predictive power. This is what is needed for many applications, like autonomous cars and robotics. One major theory about how the human brain works is that it constantly makes predictions: predictive coding. If you think about it, we experience it every day, for example when you lift an object that you thought was light but turned out to be heavy. It surprises you because, as you reached to pick it up, you had predicted how it was going to affect you and your body, or your environment overall.

Prediction allows us not only to understand the world, but also to know when we do not, and when we should learn. In fact we save information about things that we do not know and that surprise us, so next time they will not! And cognitive abilities are clearly linked to the attention mechanism in our brain: our innate ability to forgo 99.9% of our sensory inputs and focus only on the data most important for our survival, such as where the threat is and where to run to avoid it. Or, in the modern world, where my cell-phone is as I walk out the door in a rush.
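The lifting example can be turned into a toy sketch of "learn only when surprised". This is not a predictive neural network, only an illustration of the principle; the class name, the threshold and the update rate are hypothetical.

```python
class SurprisePredictor:
    """Keeps a running prediction and updates it only when surprised."""

    def __init__(self, threshold=1.0, rate=0.5):
        self.estimate = {}          # object name -> predicted weight
        self.threshold = threshold  # hypothetical surprise threshold
        self.rate = rate            # how strongly a surprise updates the prediction

    def observe(self, name, actual, default=1.0):
        predicted = self.estimate.get(name, default)
        error = actual - predicted
        surprised = abs(error) > self.threshold
        if surprised:
            # Learn only from what the prediction got wrong.
            self.estimate[name] = predicted + self.rate * error
        return surprised


brain = SurprisePredictor()
print(brain.observe("box", 5.0))  # True: expected 1.0, felt 5.0
print(brain.observe("box", 5.0))  # True: estimate is now 3.0, still off
print(brain.observe("box", 5.0))  # False: estimate 4.0 is within threshold
```

The prediction error does double duty here: it says both what to learn and when learning is needed at all.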

Building predictive neural networks is at the core of interacting with the real world, and acting in a complex environment. As such this is the core network for any work in reinforcement learning. See more below.

We have talked extensively about the topic of predictive neural networks, and were one of the pioneering groups to study them and create them. For more details on predictive neural networks, see here, and here, and here.

Continuous learning: this is important because neural networks need to keep learning new data points throughout their life. Current neural networks are not able to learn new data without being re-trained from scratch every time. Neural networks need to be able to self-assess when they need new training and when they already know something. This is also needed to perform in real life and for reinforcement learning tasks, where we want to teach machines to do new tasks without forgetting older ones. Continuous learning, or life-long learning, also ties in with transfer learning: how do we have these algorithms learn on their own by watching videos, just like we do when we want to learn how to cook something new? That is an ability that requires all the components we listed above, and it is also important for reinforcement learning. See our summary of recent results.

Reinforcement learning: this is the holy grail of deep neural network research, teaching machines how to learn to act in an environment, the real world! This requires self-learning, continuous learning, predictive power, and a lot more we do not know. There is much work in the field of reinforcement learning and we already talked about this here and more recently here.

Reinforcement learning is often referred to as the “cherry on the cake”, meaning that it is just minor training on top of a plastic synthetic brain. But how can we get a “generic” brain that can then solve all problems easily? It is a chicken-and-egg problem! Today, to solve reinforcement learning problems one by one, we use standard neural networks:

- a deep neural network that takes large data inputs, like video or audio, and compresses them into representations

- a classifier or sequence-learning neural network to learn tasks

Both these components are what everyone uses because they are some of the available building blocks. Still, results are unimpressive: yes, we can learn to play video games from scratch and master fully-observable games like chess and Go (this year even trained overnight!), but I do not need to tell you that this is nothing compared to solving problems in a complex world and to machines that can operate like us.

We believe that predictive neural networks are indispensable for reinforcement learning and the next decade will definitely solve many of the challenges we face today.

No more recurrent neural networks: recurrent neural networks (RNNs) are falling out of vogue. RNNs are particularly bad at parallelizing for training and are slow even on special custom machines, due to their very high memory bandwidth usage. As such they are memory-bandwidth-bound rather than computation-bound; see here for more details. Attention-based and especially convolutional neural networks are more efficient and faster to train and deploy, and they suffer much less from scalability problems in training and deployment.
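A small sketch can show where the parallelization problem comes from. In the recurrent function each step needs the result of the previous step, so the loop cannot be split across processors; in the convolution each output only looks at a window of inputs, so all outputs could be computed at once. The weights and kernel values are arbitrary illustrative numbers, and this toy says nothing about memory bandwidth itself.

```python
import math


def recurrent(inputs, w_in=0.5, w_rec=0.9):
    """Each hidden state needs the previous one: steps must run in order."""
    hidden, states = 0.0, []
    for x in inputs:
        hidden = math.tanh(w_in * x + w_rec * hidden)
        states.append(hidden)
    return states


def convolution(inputs, kernel=(0.25, 0.5, 0.25)):
    """Each output depends only on a window of inputs: any order works."""
    k = len(kernel)
    return [
        sum(kernel[j] * inputs[i + j] for j in range(k))
        for i in range(len(inputs) - k + 1)
    ]


signal = [1.0, 2.0, 3.0, 4.0, 5.0]
print(recurrent(signal))
print(convolution(signal))  # [2.0, 3.0, 4.0]
```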

We have already seen that convolutional and attention-based neural networks are slowly supplanting RNNs in speech recognition, and are also finding their way into reinforcement learning architectures and AI in general.

we need new neural networks all the time: see here

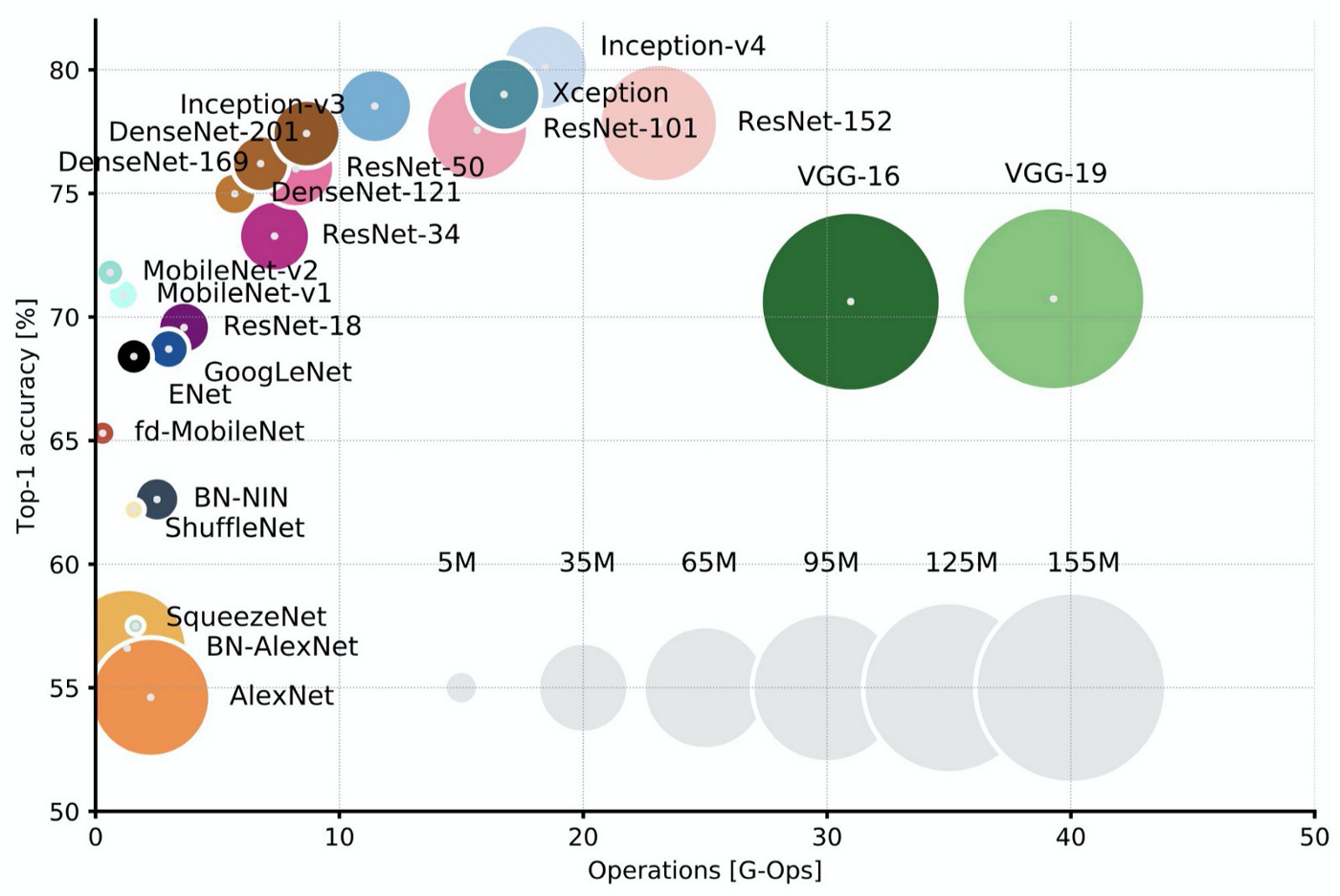

NN Architectures: but what about the neural network architecture? Who defines that? “We do”, it turns out! The last decade has mostly seen the results of architectures crafted by human brains, a skill based on experience and a bit of luck! The last few years have seen a rise of algorithmically-created neural network architectures. This is better than relying on human experience or luck, but the techniques still rely on just a handful of pre-selected modules, and are not yet able to efficiently map the huge space of possible topologies. The next decade will surely see increased research activity in this area, and will most likely reduce the dependence on human design of NN architectures.

New NN: there is always the promise of new neural network algorithms, such as spiking networks, reservoir networks, oscillating networks, and more. I believe these will remain an area of research for a few stubborn groups, as after so many years we have yet to see any of the promises materialize. We should keep an eye out for new types of NN that can offer demonstrations such as categorizing images, transcribing speech and possibly driving your car, before committing to a review. See this commentary also.

This blog post will evolve, like our algorithms and our machines. Please check it again soon.

About the author

I have almost 20 years of experience in neural networks in both hardware and software (a rare combination). See about me here: Medium, webpage, Scholar, LinkedIn, and more…

Note: this is a collection of personal thoughts, not the view of my employer.