Adversarial predictive networks

By Lukasz Burzawa, Abhishek Chaurasia and Eugenio Culurciello

We wanted to test the idea that a predictive neural network like CortexNet would benefit from simultaneously training to:

1- predict future frames in a video

2- use adversarial training to distinguish between real frames in a video and the ones generated by the network

We call this Adversarial Predictive Training. The idea is to improve on the capability of CortexNet to pre-train on more unlabeled data and then use only small amounts of labeled data.

We modified our new kind of neural network in this way:

We added a classifier C to predict whether a frame is real or generated. Our network's discriminator, or encoder, D is like a standard multi-layer neural network, so we place the classifier C after all the green layers. The blue generative, or decoder, layers are instead used to reconstruct future images or representations. More details are here.

In order to train we use the following steps:

1- we train the network to predict next frames just as in a regular CortexNet

2- once the network can generate decent future frames, we train classifier C to predict whether the input was a real next frame or a generated next frame

We run steps 1 and 2 in sequence at every training step.
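The two steps above can be sketched in PyTorch roughly as follows. This is a minimal sketch and not the CortexNet code: the tiny `encoder`, `decoder` and `classifier` modules, the MSE and binary cross-entropy losses, the two SGD optimizers, and the choice to detach the generated frame in step 2 are all assumptions made for illustration.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical stand-ins for the D (encoder), G (decoder) and C (classifier) parts.
encoder = nn.Sequential(nn.Conv2d(1, 8, 3, stride=2, padding=1), nn.BatchNorm2d(8), nn.ReLU())
decoder = nn.ConvTranspose2d(8, 1, 4, stride=2, padding=1)
classifier = nn.Sequential(nn.AdaptiveAvgPool2d(1), nn.Flatten(), nn.Linear(8, 1))

# Assumption: the encoder is shared, so both losses update its weights.
opt_pred = torch.optim.SGD(list(encoder.parameters()) + list(decoder.parameters()), lr=0.01)
opt_cls = torch.optim.SGD(list(encoder.parameters()) + list(classifier.parameters()), lr=0.01)
mse, bce = nn.MSELoss(), nn.BCEWithLogitsLoss()


def train_step(frame_t, frame_next):
    # Step 1: predict the next frame from the current one.
    opt_pred.zero_grad()
    predicted = decoder(encoder(frame_t))
    loss_pred = mse(predicted, frame_next)
    loss_pred.backward()
    opt_pred.step()

    # Step 2: classify real next frames (label 1) vs generated ones (label 0).
    opt_cls.zero_grad()
    fake = predicted.detach()  # assumption: no gradient flows back into the generator here
    frames = torch.cat([frame_next, fake])
    labels = torch.cat([torch.ones(len(frame_next), 1), torch.zeros(len(fake), 1)])
    loss_cls = bce(classifier(encoder(frames)), labels)
    loss_cls.backward()
    opt_cls.step()
    return loss_pred.item(), loss_cls.item()


# Random tensors stand in for a batch of 4 grayscale 16x16 video frames.
frame_t, frame_next = torch.rand(4, 1, 16, 16), torch.rand(4, 1, 16, 16)
loss_pred, loss_cls = train_step(frame_t, frame_next)
```

Because the encoder weights are shared in this sketch, the prediction loss and the real/fake loss both pull on the same parameters at every training step.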

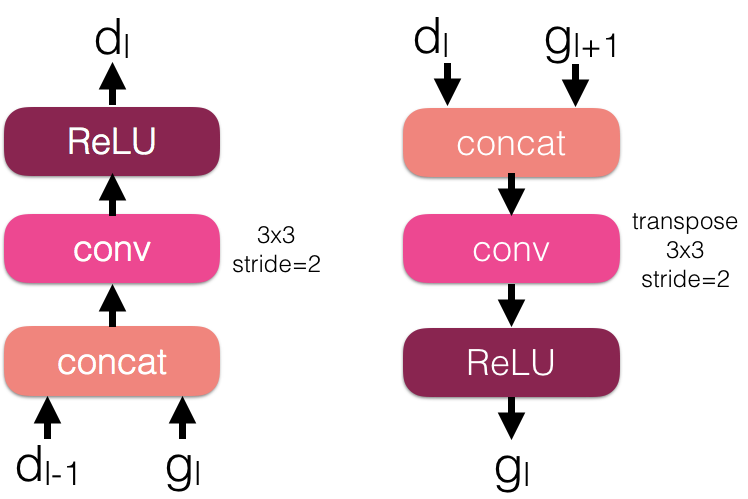

These are the G and D blocks in detail:

D, G blocks in detail

We use batch normalization between conv and ReLU.
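As a minimal PyTorch sketch of that layer ordering: the helper name `conv_bn_relu`, the 3x3 kernel, the channel counts and the bias-free convolution are assumptions for illustration, not details taken from the D and G blocks themselves.

```python
import torch
import torch.nn as nn


def conv_bn_relu(in_channels, out_channels):
    # Order: convolution, then batch normalization, then ReLU.
    return nn.Sequential(
        # Assumption: no conv bias, since BatchNorm2d has its own learnable shift.
        nn.Conv2d(in_channels, out_channels, kernel_size=3, padding=1, bias=False),
        nn.BatchNorm2d(out_channels),
        nn.ReLU(inplace=True),
    )


block = conv_bn_relu(3, 16)
out = block(torch.rand(2, 3, 32, 32))  # random batch standing in for two RGB frames
```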

Results: using the KTH dataset, we pre-trained the network with the adversarial predictive method described above, and then used 10% of the data to train the classifier C while keeping the generator network frozen.
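One possible reading of this setup, sketched in PyTorch: the pre-trained weights are frozen and only the classifier is updated on the small labeled subset. The `pretrained` stand-in module, the linear classifier, the SGD settings and the random data are all hypothetical; in this sketch every pre-trained layer is frozen, which is a simplifying assumption.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical stand-in for the pre-trained network; KTH has 6 action classes.
pretrained = nn.Sequential(nn.Conv2d(1, 8, 3, padding=1), nn.ReLU(),
                           nn.AdaptiveAvgPool2d(1), nn.Flatten())
classifier = nn.Linear(8, 6)

# Freeze the pre-trained weights so only the classifier is updated.
for p in pretrained.parameters():
    p.requires_grad_(False)
pretrained.eval()

optimizer = torch.optim.SGD(classifier.parameters(), lr=0.1)
criterion = nn.CrossEntropyLoss()

# Random tensors stand in for the labeled 10% subset.
frames = torch.rand(12, 1, 16, 16)
labels = torch.randint(0, 6, (12,))

before = [p.clone() for p in pretrained.parameters()]
loss = criterion(classifier(pretrained(frames)), labels)
optimizer.zero_grad()
loss.backward()
optimizer.step()

# The frozen weights should be identical before and after the update.
frozen_unchanged = all(torch.equal(a, b) for a, b in zip(before, pretrained.parameters()))
```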

Case 1: we trained the entire network in a supervised way on 10% of the data. We got a maximum test accuracy of 60.43%.

Case 2: we used Adversarial Predictive training (fake/real classification plus next-frame prediction) on 100% of the data, then fine-tuned the network on 10% of the data. We got a maximum test accuracy of 53.33%.

Case 3: we used predictive training only (next-frame prediction, as in the original CortexNet) on 100% of the data, then fine-tuned the network on 10% of the data. We got a maximum test accuracy of 71.98%.

Conclusion: we expected case 2 to be better than case 3, but that did not happen (53.33% vs 71.98%, case 2 vs case 3). Case 2 even fell below the purely supervised baseline of case 1 (60.43%). Our conclusion is that Adversarial Predictive training produces a conflict between training the classifier to separate fake from real frames and the predictive capabilities of the entire network.

Using 33% (62% vs 79%, case 2 vs case 3) or 50% (71% vs 81%, case 2 vs case 3) of the data instead of only 10% did not change the situation, and using more data defeats the purpose of pre-training on unlabeled data anyway.
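To make the comparison concrete, the gap between cases 2 and 3 can be tallied from the accuracies reported above. This is just arithmetic on the published numbers; the dictionary layout and names are illustrative, and the 33% and 50% figures are rounded as reported.

```python
# Max test accuracy (%) reported above, keyed by the fraction of data used.
# Case 2: adversarial predictive pre-training; case 3: predictive pre-training only.
results = {
    "10%": {"case 2": 53.33, "case 3": 71.98},
    "33%": {"case 2": 62.0, "case 3": 79.0},
    "50%": {"case 2": 71.0, "case 3": 81.0},
}

# Gap in percentage points by which case 3 leads case 2.
gaps = {frac: round(acc["case 3"] - acc["case 2"], 2) for frac, acc in results.items()}
for frac, gap in gaps.items():
    print(f"with {frac} of the data: case 3 leads case 2 by {gap} points")
```

The gap narrows as more data is used, but case 3 stays ahead at every fraction.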

About the author

I have almost 20 years of experience in neural networks in both hardware and software (a rare combination). See about me here: Medium, webpage, Scholar, LinkedIn, and more…
