Analysis of deep neural networks

By Alfredo Canziani, Thomas Molnar, Lukasz Burzawa, Dawood Sheik, Abhishek Chaurasia, Eugenio Culurciello

These are updated figures from the paper:

An Analysis of Deep Neural Network Models for Practical Applications, by Alfredo Canziani, Adam Paszke, Eugenio Culurciello.

The new figures include recent models such as:

- ShuffleNet

- MobileNet

- Xception

- DenseNet

- SqueezeNet

Refer to our blog post on neural architectures for more details.

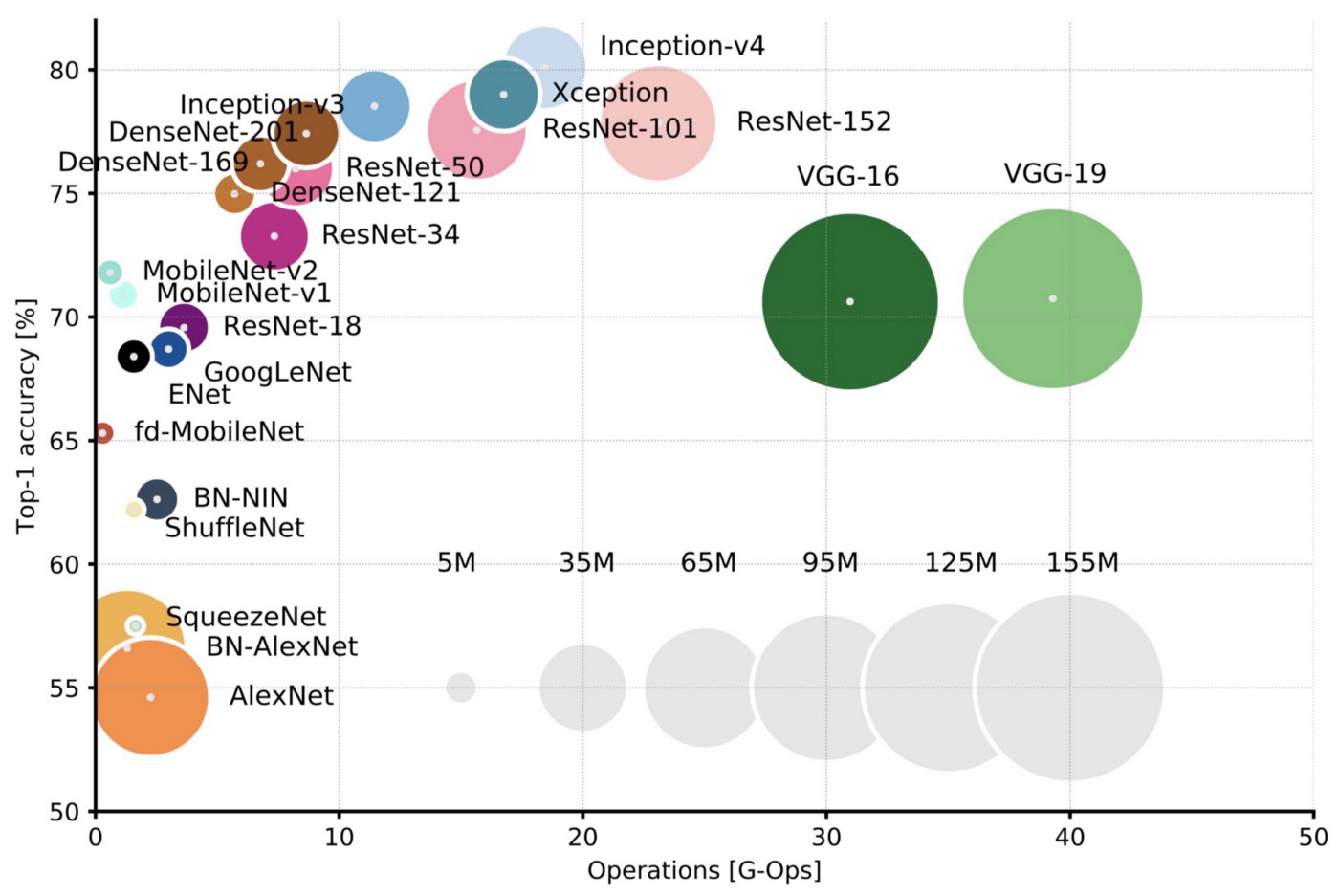

Updated Figure 2: Top-1 vs. operations, size ∝ parameters. Top-1 one-crop accuracy versus the number of operations required for a single forward pass. The size of the blobs is proportional to the number of network parameters; a legend is reported in the bottom-right corner, spanning from 5×10^6 to 155×10^6 params. Both these figures share the same y-axis, and the grey dots highlight the centre of the blobs.
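One way to reproduce this kind of blob sizing is to make each marker's area linearly proportional to the parameter count. The sketch below is not the original plotting code: the function name `marker_area` and the scaling constant `max_area` are hypothetical, chosen so that the largest legend value (155×10^6 parameters) maps to a fixed maximum area.

```python
def marker_area(num_params: float, max_params: float = 155e6, max_area: float = 1000.0) -> float:
    """Return a marker area proportional to num_params.

    Hypothetical scaling: the largest legend value (155e6 params) maps to
    max_area; smaller models get proportionally smaller areas.
    """
    if num_params < 0:
        raise ValueError("parameter count must be non-negative")
    return max_area * num_params / max_params


# Legend entries spanning the range shown in the figure (5e6 to 155e6 params).
for p in (5e6, 50e6, 155e6):
    print(f"{p / 1e6:>5.0f}M params -> area {marker_area(p):7.1f}")
```

Scaling the area rather than the radius keeps a blob's visual weight linear in the parameter count. With matplotlib, such a value can be passed as the `s` argument of `scatter`, which is interpreted as marker area in points².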

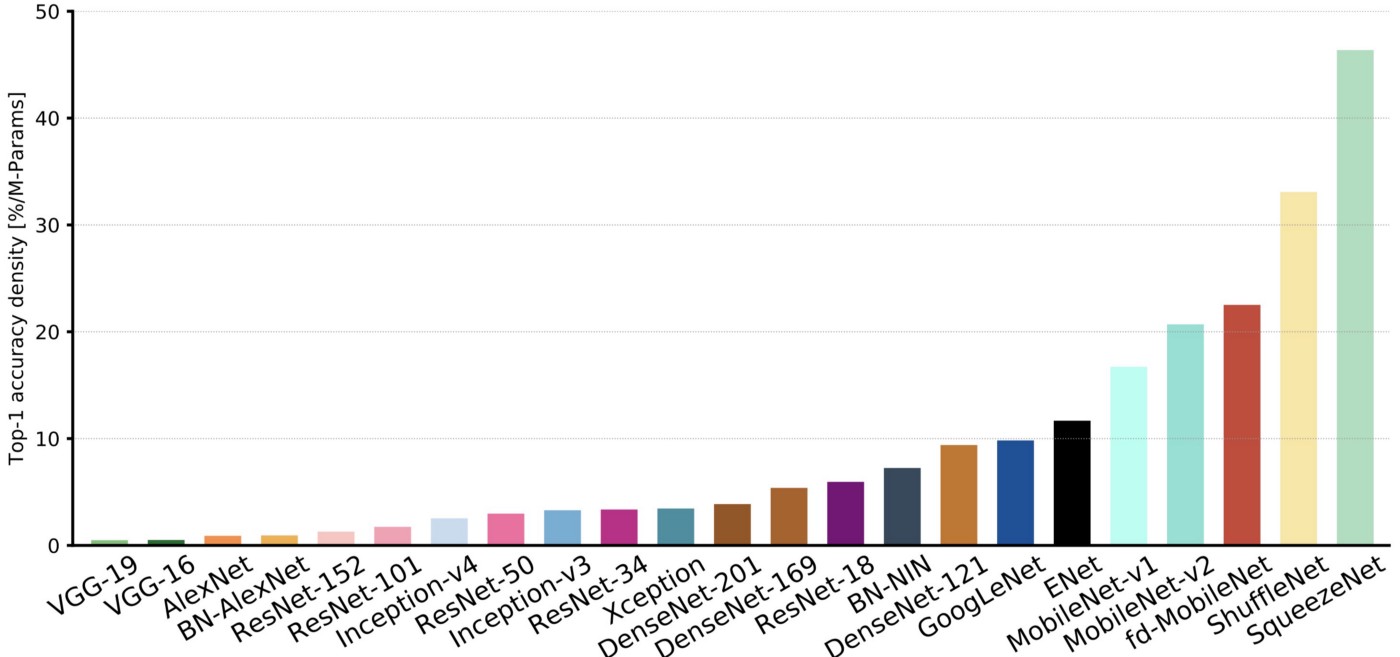

Updated Figure 10: Accuracy per parameter vs. network. Information density (accuracy per parameter) is an efficiency metric that highlights the capacity of a specific architecture to better utilise its parametric space. Models like VGG and AlexNet are clearly oversized and do not take full advantage of their potential learning ability. On the far right, ResNet-18, BN-NIN, GoogLeNet and ENet (marked by grey arrows) do a better job at “squeezing” all their neurons to learn the given task, and are the winners of this section.
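Information density can be computed by dividing a model's top-1 accuracy by its parameter count, expressed here in percent per million parameters. In the minimal sketch below, the model names and numbers are made-up placeholders that only illustrate the computation; they are not measurements from the paper.

```python
from dataclasses import dataclass


@dataclass
class Model:
    name: str
    top1_percent: float     # top-1 accuracy, in percent
    params_millions: float  # number of parameters, in millions


def information_density(m: Model) -> float:
    """Accuracy per parameter, in percent per million parameters."""
    return m.top1_percent / m.params_millions


# Hypothetical models: placeholder numbers, not results from the paper.
models = [
    Model("large-net", 70.0, 140.0),
    Model("compact-net", 70.0, 10.0),
]

# Rank from most to least parameter-efficient.
ranked = sorted(models, key=information_density, reverse=True)
for m in ranked:
    print(f"{m.name}: {information_density(m):.2f} %/M-params")
```

Two models with equal accuracy but a 14× difference in size differ by the same factor in density. This is the sense in which a network can be "oversized": it reaches its accuracy while spending far more parameters than a denser architecture.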

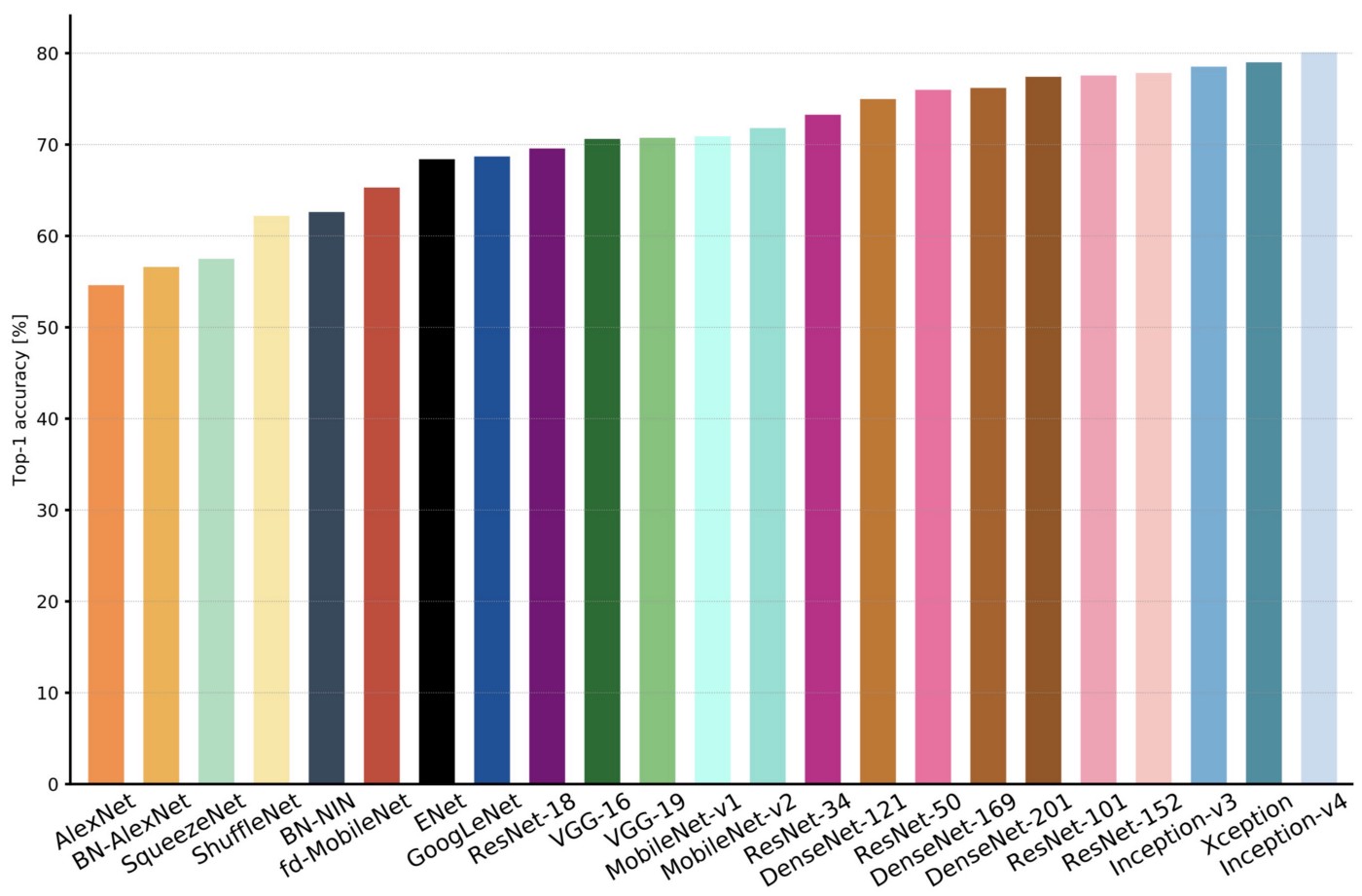

Updated Figure 1: Top-1 vs. network. Single-crop top-1 validation accuracies for top-scoring single-model architectures. With this chart we introduce our choice of colour scheme, which is used throughout this publication to effectively distinguish different architectures and their corresponding authors. Note that networks of the same group share the same hue; for example, ResNets are all shades of pink.
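A colour scheme in which every family shares one hue and each variant gets a different lightness can be sketched with Python's standard `colorsys` module. The helper `family_palette`, the hue value, and the lightness and saturation ranges below are assumptions for illustration, not the values used in the figures.

```python
import colorsys


def family_palette(hue: float, n_variants: int,
                   light_min: float = 0.35, light_max: float = 0.75,
                   saturation: float = 0.7) -> list:
    """Return n_variants hex colours that share one hue but differ in lightness.

    hue is in [0, 1) as expected by colorsys; lightness is spread evenly
    between light_min and light_max.
    """
    colours = []
    for i in range(n_variants):
        # Evenly spaced lightness; a single variant gets the midpoint.
        t = i / (n_variants - 1) if n_variants > 1 else 0.5
        lightness = light_min + t * (light_max - light_min)
        r, g, b = colorsys.hls_to_rgb(hue, lightness, saturation)
        colours.append("#{:02x}{:02x}{:02x}".format(
            round(r * 255), round(g * 255), round(b * 255)))
    return colours


# Hypothetical assignment: all ResNet variants share one pinkish hue (~331 degrees).
resnets = ["ResNet-18", "ResNet-34", "ResNet-50", "ResNet-101", "ResNet-152"]
palette = family_palette(hue=0.92, n_variants=len(resnets))
for name, colour in zip(resnets, palette):
    print(name, colour)
```

Fixing the hue per family and varying only lightness keeps related models visually grouped while still letting individual variants be told apart.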

Note: if you plan to use these figures in any form in your talks, presentations, or articles, please cite our paper and blog.

About the author

I have almost 20 years of experience in neural networks, in both hardware and software (a rare combination). More about me: Medium, webpage, Scholar, LinkedIn, and more…